The first artificial intelligence program was made in 1956. Since then, researchers from all over the world have worked to enhance the technology and make it more effective. As the technology grows more advanced, the things it can do seem infinite. That may sound like a universally positive thing, but because the technology has the ability to learn, some people are questioning whether research into artificial intelligence should continue. By looking at what each side is saying, we can try to deduce the values and implications that go along with what they believe.

Unbiased AI

In his article “Why We Should Be Careful When Developing AI,” Dr Mark van Rijmenam says that AI is biased because it is made by biased humans. He also points out the flaws of current AI and says that it has deliberately caused harm, such as through privacy-invading facial recognition cameras. Van Rijmenam also writes that he fears the creation of a super AI, which would have autonomous self-control, self-understanding, and the ability to learn. He fears that such a super AI would be able to manipulate humans and other AI to achieve dominance. He believes that instead of developing this AI at such an extreme rate, we should monitor its behavior and make sure to keep it under control. He says that if a super AI is created, it will reshape the world to its own preferences. He goes on to say that we need to train these AI agents with unbiased information so that they do not reshape the world according to their biases. Another concern he has is that we cannot always know why some algorithms arrive at a certain outcome. He thinks we should have a way to check why the AI made each decision in order to make sure it does not become biased.

Clearly, Van Rijmenam fears that AI will have biases that shape the world and benefit only the things the AI values. This could be devastating for any group of people the AI is not biased toward. He also values humanity and fears that if we are not careful, AI will take over, humans will no longer be valued, and the computer will do whatever it believes is most beneficial, no matter the cost.

Neuralink

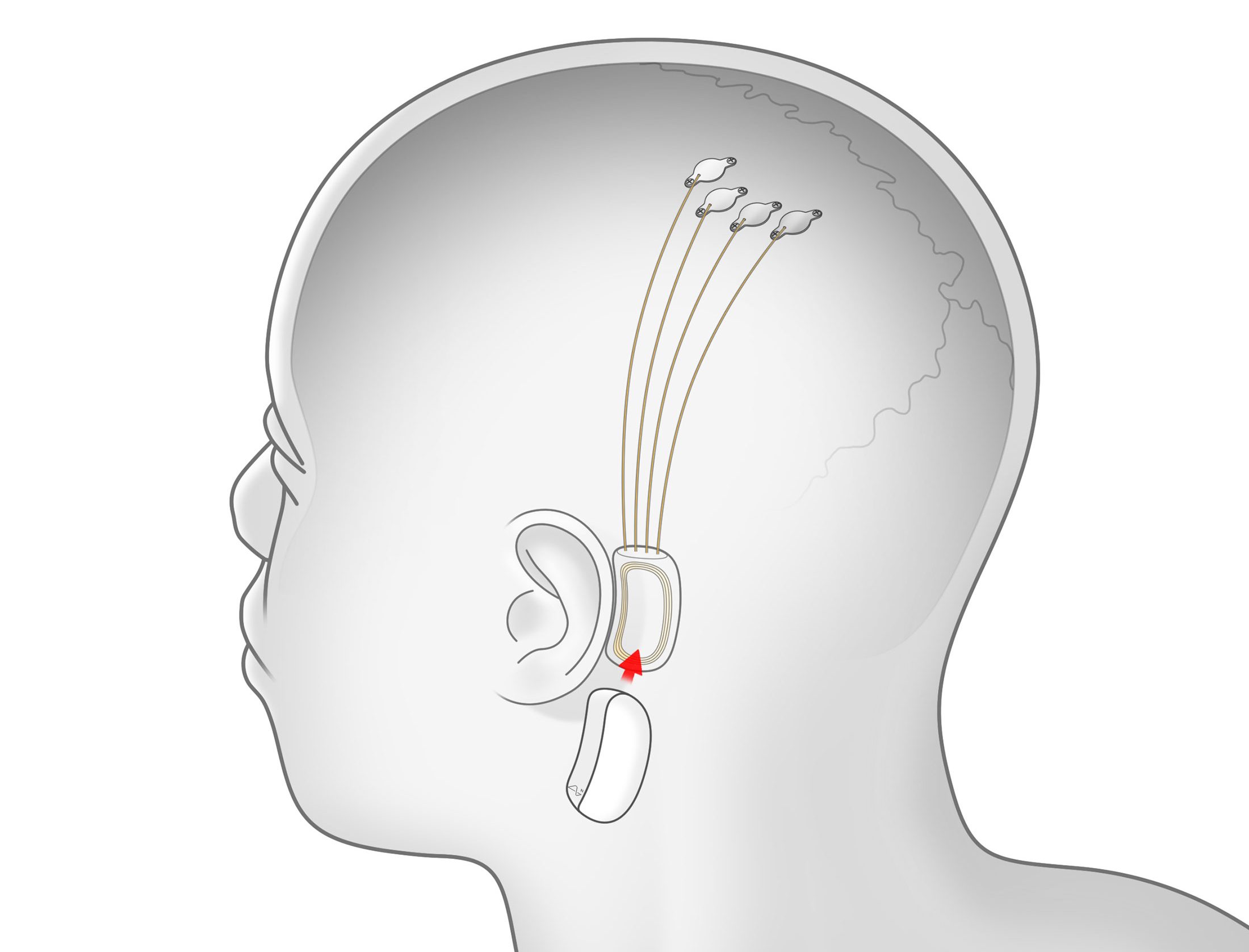

Elon Musk has a different idea of how to handle AI. He believes that instead of trying to make sure AI is unbiased, we can essentially merge with it by putting chips into our brains that connect us to the internet. Musk’s company Neuralink is already working on making this happen. Not only would the device connect us to AI, but it could also help people control prosthetic limbs, among many other applications. Although this may seem like it could never happen, or at least not for many years, Musk is already experimenting with the technology in animals and recently livestreamed a demonstration of the device in pigs. If this technology were widely adopted, it would change the entire structure of American society. The device also raises the question of whether we would retain our humanity, and of what it means to have our humanity in the first place.

If Neuralink comes to fruition, it would cause a very big change in the way we currently live. First, would it be up to each person to decide whether to get the implant? Would it be up to parents or guardians to decide whether their child should get one? A lot would have to be sorted out in that sense. Next, if everyone were connected to AI, there would have to be a new way to pay for or buy things. We would probably have to get rid of our current currency completely, because if half the population were walking around with supercomputers in their heads, they would have all the knowledge they need in their heads. And what would the people who do not get the implant do? They would always have less information, because people with computers in their heads are always connected. College would probably be rendered useless for most people as well. There would also be security issues, because people would most likely try to hack into our implants.

Something interesting to consider is what effect these implants would have on how people interpret art and music. There is no way to tell whether it would be any different, but I imagine that we would essentially evolve to see art and music as just colors and sounds, and we would no longer be able to see them for what they are. Another feature that Neuralink would offer is the ability to rewatch experiences we have gone through. This could be very interesting, because human memory is inaccurate, so if we could rewind our past experiences, we would know what truly happened. In the future, researchers might find a way to record emotions as well, so that when you rewatch a past experience, you feel the same emotions you felt at the time. That raises the question, though, of whether our emotions would even be the same after getting the implant.

Elon Musk values the advancement of humanity and fears a future similar to the one Van Rijmenam fears, in which AI completely takes over and renders humans useless. The difference in their thinking is that Musk’s solution could make people question whether we are still the same creatures after getting the implant. This shows that Musk cares less about whether we remain the same creatures and more about humankind surviving for as long as it can. Van Rijmenam, on the other hand, definitely cares more about what makes us human and about morals. He cares more because he knows that failure could mean the end of mankind.

What Is Humanity?

Thomas Suddendorf, a professor of psychology at the University of Queensland, writes in an article that scholars like to say what makes humans unique are things like language, foresight, mind-reading, intelligence, culture, or morality. He then points out that studies have shown animals demonstrating these same qualities, so, he argues, they are not what gives us our humanity. He recounts an anecdote about a monkey that killed a 14-year-old boy and jokes that the monkey never stood trial for murder. He finishes his article by saying that what gives us our humanity is the ability to think about the future consequences of our actions. By Suddendorf’s definition, Neuralink would allow us to maintain our humanity, but Van Rijmenam also cares about our morals, and there is no way to tell what the device would do to our morality and values. I imagine that Van Rijmenam fears our values would change too much and would no longer reflect the things he values and thinks should be valued.

Another thing to look at is the self-driving car. While developing these cars, researchers had to consider every possible situation that could occur, including accidents. They had to consider very hard situations, such as when the only options are to hit a dog or a person. What should the car do? And who gets to decide? There are many things to consider in this matter. An article from Nature reports that people in different countries give different answers when asked these tough moral questions. One thing most people agree on is that human lives are more valuable than animal lives. AI would have to make these decisions as well, and that raises something very interesting: Van Rijmenam wants AI to be unbiased, but because it is developed by humans, won’t it be biased toward them?

Fast-Moving AI

Either way, there is another horse in this race: the people who think AI should not have to be monitored at all and should progress as fast as possible. In a Fast Company article, John Pavlus argues that AI should be able to progress as fast as possible because it will enable large advances in many fields. In the article, Pavlus notes that between 54% and 75% of people surveyed believe AI will help the rich and hurt the poor. From what I can gather from the article, Pavlus places far more value on economics and money than either Musk or Van Rijmenam does. He briefly addresses his view that AI should be unbiased, but he does not offer any plan for how to achieve this, and by saying that AI should move as fast as possible, he makes it clear that he cares more about whether AI does good for the economy than whether it is unbiased.

Clearly, AI is a big part of the future, and it will have huge effects no matter what happens. For the most part, all sides agree that if AI is used correctly it can be very beneficial to the world, but by looking at each side and analyzing what it values, we can try to decide what is best.

Works Cited

IEEE Spectrum: Technology, Engineering, and Science News, spectrum.ieee.org/the-human-os/biomedical/devices/elon-musk-neuralink-advance-brains-ai.

Maxmen, Amy. “Self-Driving Car Dilemmas Reveal That Moral Choices Are Not Universal.” Nature News, Nature Publishing Group, 24 Oct. 2018, http://www.nature.com/articles/d41586-018-07135-0.

Pavlus, John. “AI Is Moving Too Fast, and That’s a Good Thing.” Fast Company, 3 Dec. 2019, http://www.fastcompany.com/90429993/ai-is-moving-too-fast-and-thats-a-good-thing.

Suddendorf, Thomas. “What Makes Us Human?” Psychology Today, Sussex Publishers, 10 Mar. 2014, http://www.psychologytoday.com/us/blog/uniquely-human/201403/what-makes-us-human.

Van Rijmenam, Mark. “Why We Should Be Careful When Developing AI.” AI, Blockchain & Big Data Speaker on the Future of Work, 9 Oct. 2019, vanrijmenam.nl/we-should-be-careful-developing-ai/.